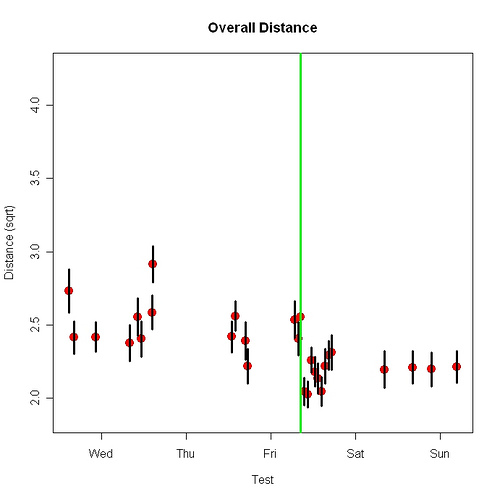

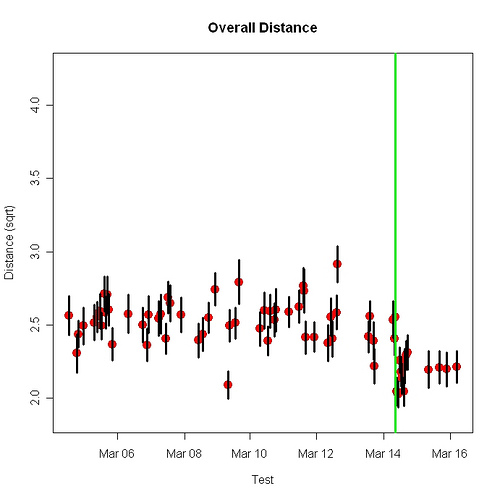

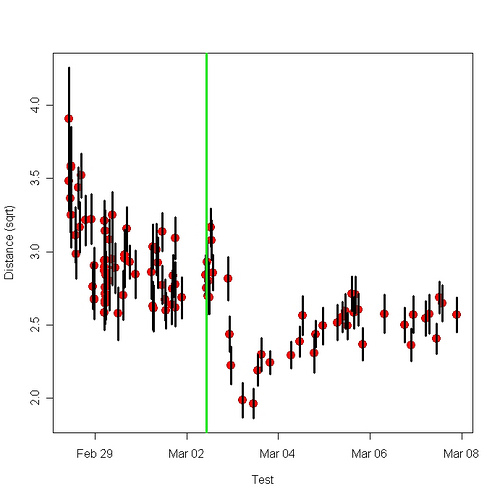

The results I described in the previous post surprised me because (a) my performance suddenly got better after being stable for many tests and (b) after the improvement, further practice appeared to make my performance worse. I’d never before seen either result in a motor learning situation. If you can think of an explanation of the result that practice makes performance worse, and animal learning isn’t your research area, please let me know.

Learning researchers used to think of associative learning as a kind of stamping-in process: the more often you experience A and B together, the stronger the association between them. Simple as that. In the 1960s, however, several results called this idea into question. Situations that should have produced learning did not. What united the various results was that in each case, learning didn’t happen when the animal already expected the second event. If A and B occur together and you already expect B, there is no learning. The theories that explained these findings (the Rescorla-Wagner model is the best known, but the Pearce-Hall model is the one that appears to be correct) took the discrepancy between expected and observed, an event’s “surprise factor,” rather than the event itself, to be what causes learning. We are constantly trying to predict the future; only when we fail do we learn.
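The error-correction idea is easy to write down. Below is a minimal Python sketch of the Rescorla-Wagner rule, ΔV = αβ(λ − ΣV), applied to blocking, the classic case where an already-expected event produces no new learning. The function name, parameter values, and trial counts are illustrative choices, not taken from any particular study. Pearce-Hall works differently (past surprise changes how much attention a cue receives) and is not shown here.

```python
# A minimal Rescorla-Wagner sketch of "blocking": learning is driven by
# prediction error (surprise), not by mere co-occurrence of events.
# All names and parameter values here are illustrative assumptions.

def rescorla_wagner(trials, salience, beta=0.3, lam=1.0, strengths=None):
    """Return associative strengths after a sequence of trials.

    trials:    list of (cues, outcome_present) pairs, e.g. (("A", "B"), True)
    salience:  dict mapping each cue to its learning rate alpha
    beta:      learning rate tied to the outcome
    lam:       maximum strength the outcome can support
    strengths: optional starting strengths (copied, not modified)
    """
    v = dict(strengths) if strengths else {cue: 0.0 for cue in salience}
    for cues, outcome_present in trials:
        target = lam if outcome_present else 0.0
        prediction = sum(v[c] for c in cues)  # summed expectation from all cues present
        error = target - prediction           # the "surprise factor"
        for c in cues:
            v[c] += salience[c] * beta * error
    return v


salience = {"A": 0.5, "B": 0.5}

# Blocking group: A alone predicts the outcome first, then A and B appear together.
after_phase1 = rescorla_wagner([(("A",), True)] * 30, salience)
blocked = rescorla_wagner([(("A", "B"), True)] * 30, salience, strengths=after_phase1)

# Control group: A and B appear together from the start.
control = rescorla_wagner([(("A", "B"), True)] * 30, salience)

blocked_b = blocked["B"]
control_b = control["B"]
print(f"B after blocking: {blocked_b:.3f}")
print(f"B in control:     {control_b:.3f}")
```

With these settings B ends near zero in the blocking group but near 0.5 in the control group, even though B was paired with the outcome equally often in both. The only difference is that in the blocking group the outcome was already expected.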

In my motor-learning task, imagine that the brain “expects” a certain accuracy. When actual accuracy is less than expected, performance improves. Performance stops improving when actual accuracy equals expected accuracy. The effect of more omega-3 in the blood, and therefore the brain, was to increase expected accuracy. (One of the main things the brain does is learn. If we do something that improves brain performance in other ways, it is plausible that it will also improve learning ability.) Thus the sudden improvement. The decrement in accuracy with further practice came about because, when the omega-3 concentration went down, actual accuracy was better than expected accuracy. Accuracy was “over-predicted,” a learning theorist might say. So the observed change in performance was in the opposite-from-usual direction. Accuracy got worse, not better.
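The expected-accuracy account above can be sketched the same way. This toy Python model assumes, purely for illustration, that each session moves actual accuracy a fixed fraction of the way toward expected accuracy; the rate, the accuracy levels, and the session counts are made up, not fitted to any data. Raising the expected level produces a sudden improvement after a plateau, and lowering it makes further practice reduce accuracy.

```python
# Toy model (an assumption for illustration): on each session, actual accuracy
# moves a fixed fraction of the way toward the brain's expected accuracy.
# The rate and all accuracy levels below are hypothetical.

def simulate_sessions(expected_by_session, start=0.6, rate=0.2):
    """Return actual accuracy after each session."""
    actual = start
    history = []
    for expected in expected_by_session:
        error = expected - actual
        # error > 0: accuracy below expectation, so practice improves it.
        # error < 0: accuracy "over-predicted", so practice makes it worse.
        actual += rate * error
        history.append(actual)
    return history


# Hypothetical schedule: a stable expectation, then a higher one (more omega-3),
# then a lower one (omega-3 concentration falling again).
expected = [0.6] * 20 + [0.8] * 20 + [0.7] * 20
accuracy = simulate_sessions(expected)

for session in (19, 39, 59):
    print(f"session {session + 1}: accuracy {accuracy[session]:.3f}")
```

A single error term does both jobs: the same rule that yields improvement when expectation rises yields a decline, with continued practice, when expectation falls.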

Related happiness research: “Christensen’s study was called ‘Why Danes Are Smug,’ and essentially his answer was it’s because they’re so glum and get happy when things turn out not quite as badly as they expected.”